Lifelong Robotic Vision

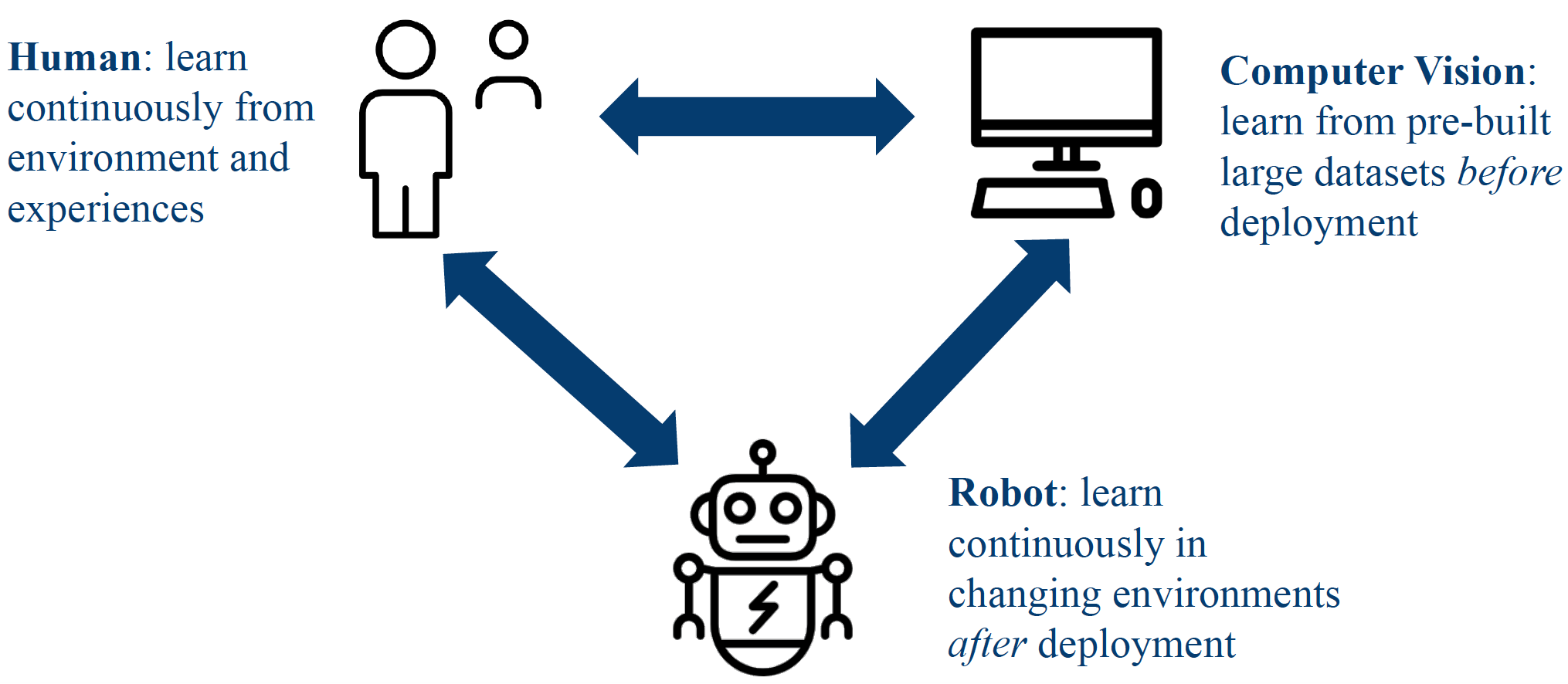

Humans have the remarkable ability to learn continuously from the external environment and from inner experience. One of the grand goals of robotics is likewise to build an artificial lifelong learning agent that can shape a cultivated understanding of the world from the current scene and its previous knowledge through autonomous lifelong development.

Recent advances in computer vision and deep learning have been very impressive, driven by large-scale datasets such as ImageNet and COCO. The breakthroughs in object/person recognition, detection, and segmentation have relied heavily on the availability of these large, representative datasets for training. However, robotic vision poses new challenges for visual algorithms developed on computer vision datasets, because those algorithms implicitly assume a non-varying distribution over a fixed set of categories and tasks. In a real environment, semantic concepts change dynamically over time. Specifically, in real scenarios the robot operates continuously under open-set and sometimes detrimental conditions, which requires a lifelong learning capability with reliable uncertainty estimates and robust algorithm designs.

Providing a robotic vision dataset collected from real, time-varying environments can accelerate both research and applications of visual models for robotics!

Dataset and Competition

We will exploit the unique characteristics of robotics to enhance robotic vision research: using additional high-resolution sensors (e.g., depth and point clouds), controlling the direction and number of cameras, and even reducing the intense labeling effort through self-supervision. To accelerate lifelong robotic vision research, we will provide robot sensor data (RGB-D, IMU, etc.) in several typical scenarios, such as homes and offices, with multiple objects, persons, and scenes, together with ground-truth trajectories acquired from auxiliary measurements with high-resolution sensors. Not only are the sensor information, scenarios, and task types highly diverse, but our dataset also embraces the slow and fast dynamics of real life, which to our knowledge makes it the first real-world dataset under the lifelong robotic vision setting.

The major challenge for lifelong robotic vision is the continuous understanding of a dynamic environment. At the object level, the robot should be able to learn new object models incrementally without forgetting previous objects. At the scene level, the robot should be able to incrementally update its world model without getting lost. Thus, we start from the particular research topics of lifelong object recognition and lifelong SLAM, provide benchmarks for both tasks, and organize competitions to accelerate related research. The first competition will be held online from July to October 2019, with a workshop-like event at IROS 2019 in Macau on November 4.

Vision and Expectation

Research outcomes. Research challenges and competitions should advance the state of the art by providing rich training/testing data and context information. Moreover, realistic environments should inspire the development of more practical and scalable learning methods. Our dataset should also suggest potential modifications to existing robotic vision contests that we believe will encourage these directions.

Improving Participation. The purpose of our dataset and challenge is to provide both an opportunity to exchange ideas and a venue to evaluate and encourage state-of-the-art research. The Lifelong Robotic Vision challenge aims to encourage the participation of machine learning, robotics, and computer vision researchers. Below we discuss practical suggestions to increase researcher participation.

Organizers

Acknowledgement

We thank the following collaborators for contributing to the dataset: Chunhao Zhu, Dongjiang Li, Dion Gavin Mascarenhas, Feng (Eric) Fan, Ke Ou, Kwunyu Wu, Qinbin Tian, Qihan (Jack) Yang, Qiwei Long, Rong Hong, Yuxin Tian, Yiming Hu, and Zhigang Wang.

We thank Yingkai Liu for the great website template.

Further Reading

Join the Competition

To join our IROS 2019 competition (either the Lifelong Object Recognition or the Lifelong SLAM task), please contact us at qi.she@intel.com or xuesong.shi@intel.com.
